MGIM: Masked Geo-Inference for Land Parcels

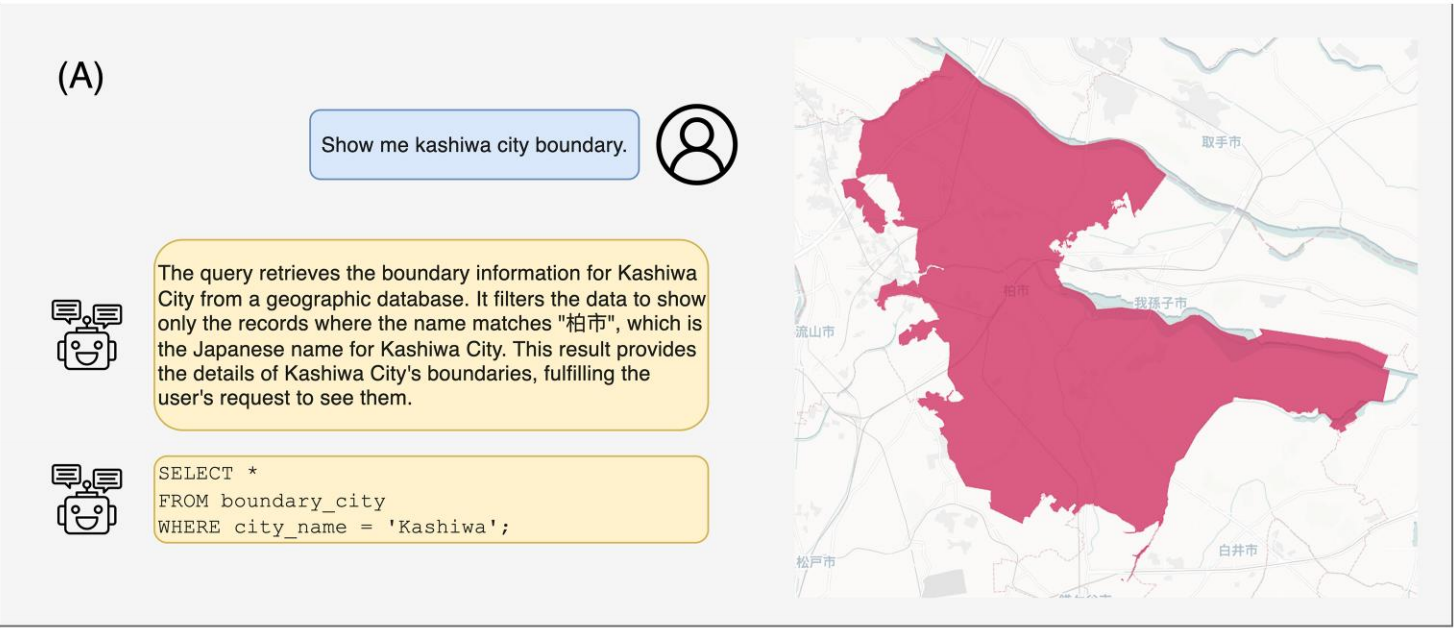

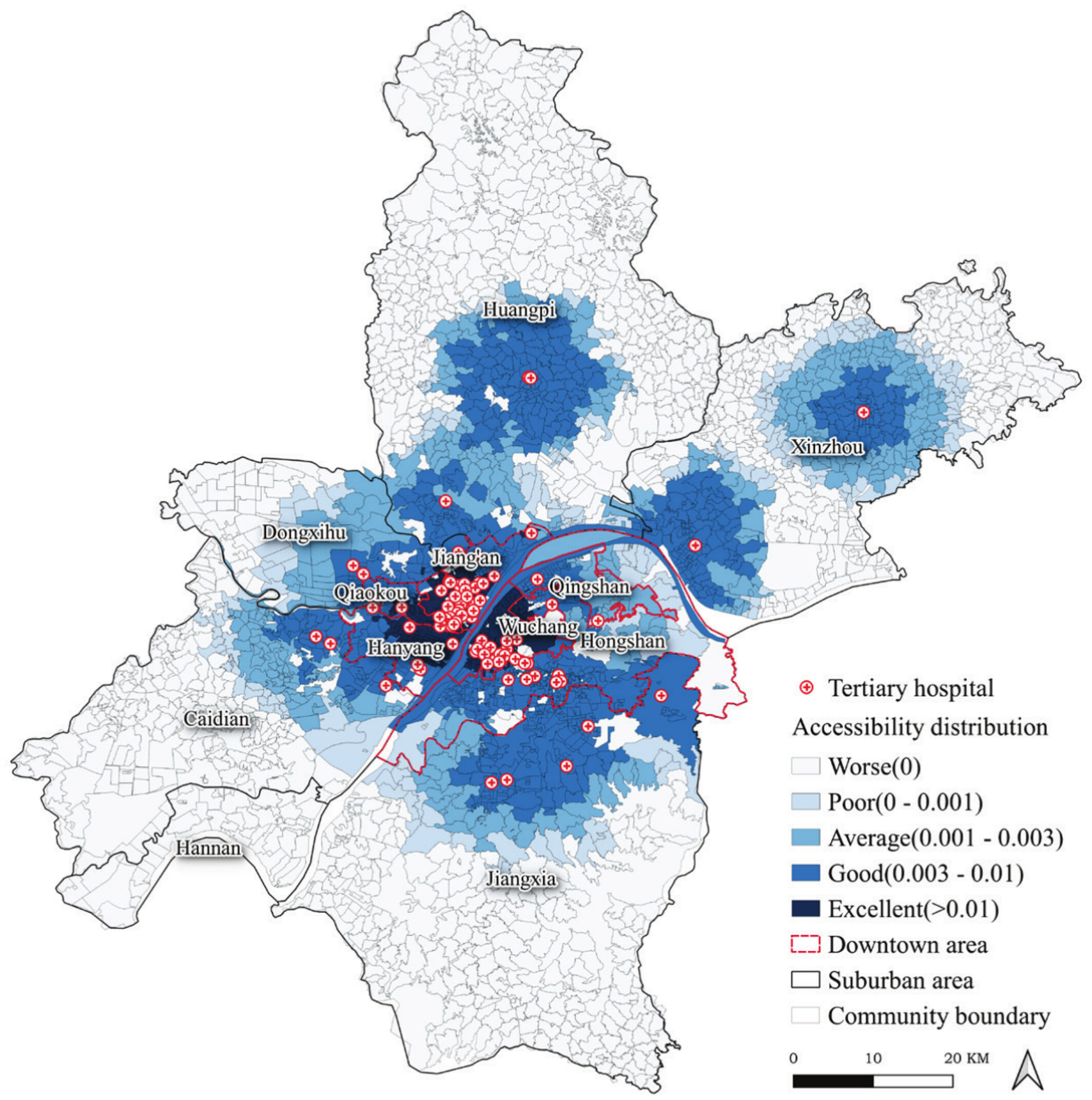

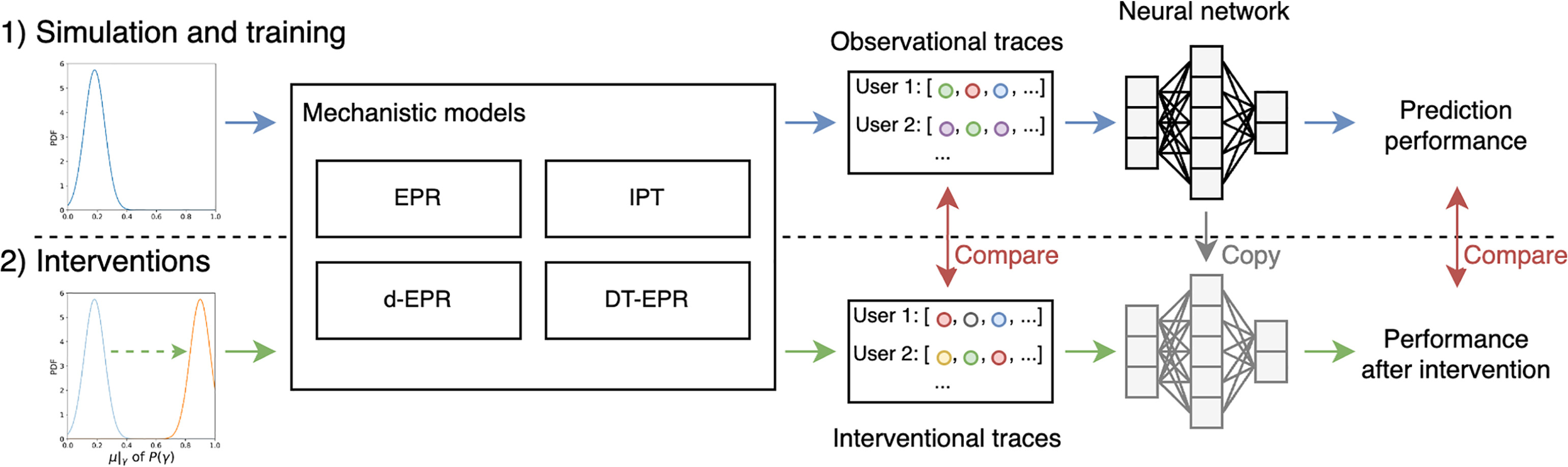

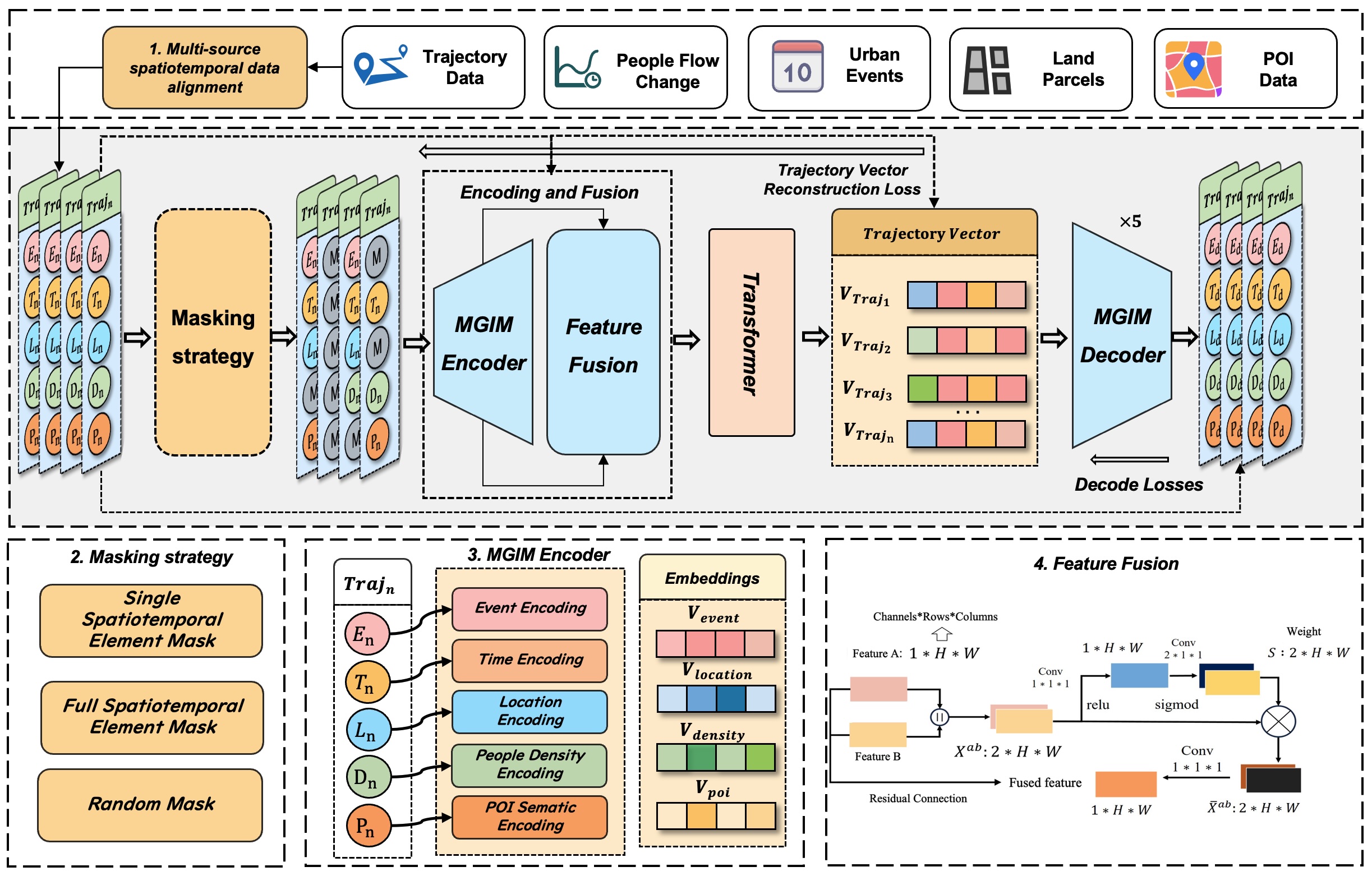

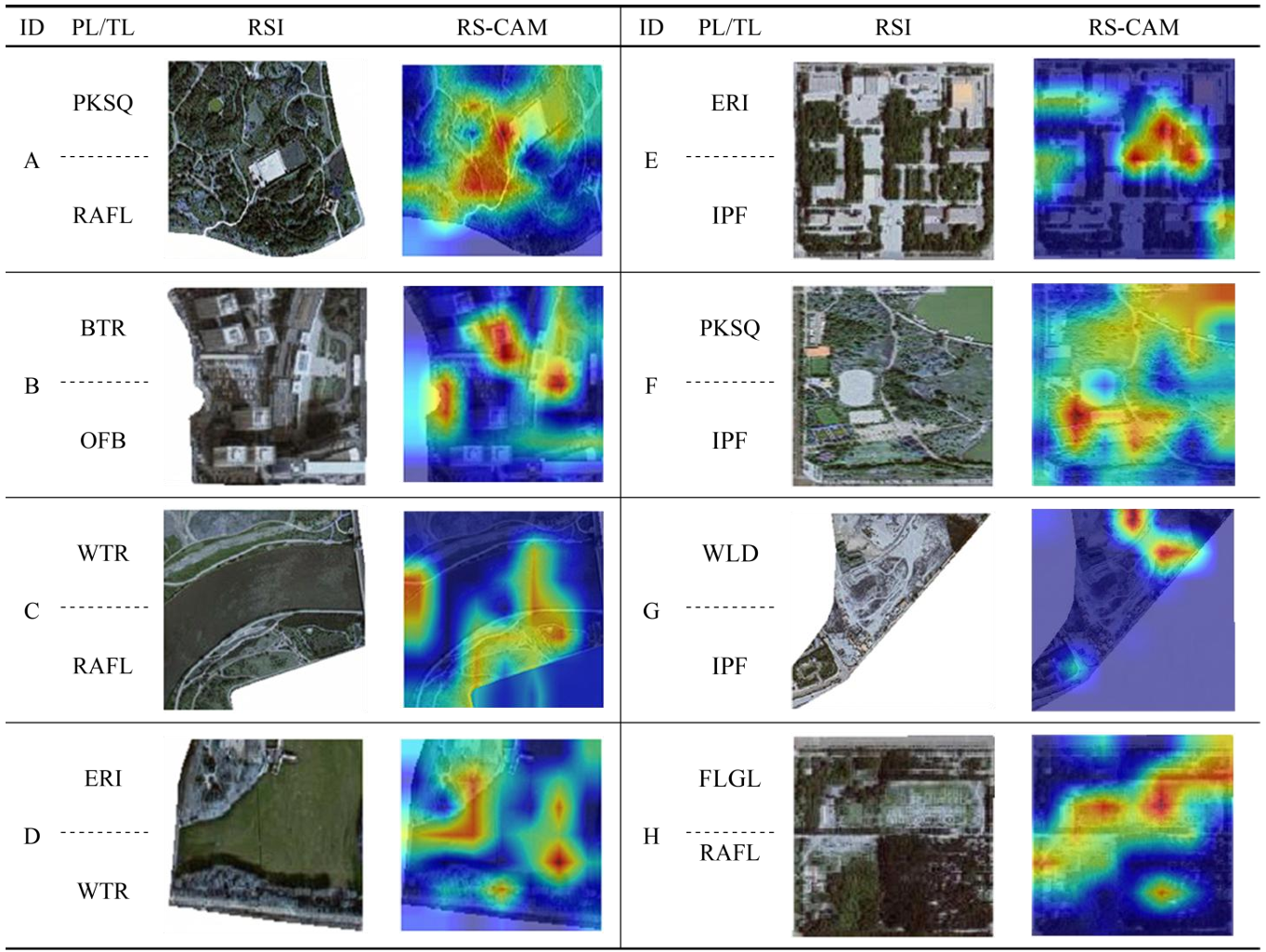

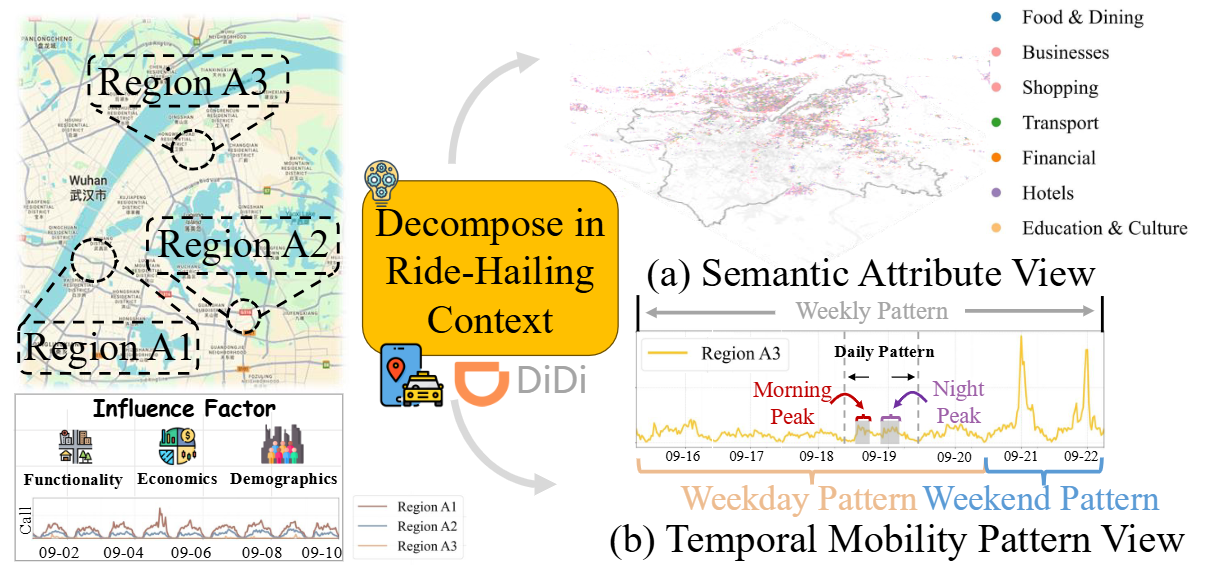

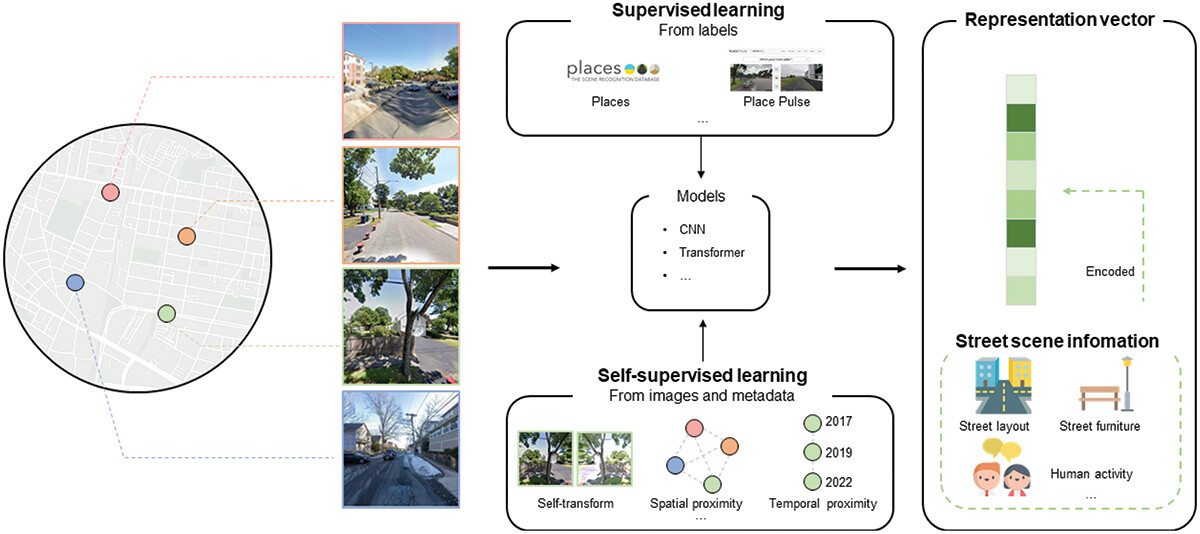

Effectively modeling spatio-temporal context to support geographic reasoning is essential for advancing Geospatial Artificial Intelligence. Inspired by masked language models, this paper introduces the Masked Geographical Information Model (MGIM), a novel self-supervised framework for learning context-aware representations from multi-source spatio-temporal data. The framework’s core innovations are a parcel-scale method for multi-source data fusion and a custom self-supervised masking strategy for diverse geographic elements. This integrated modeling approach enables the model to capture complex spatio-temporal relationships and to perform consistently well across diverse geographic reasoning tasks, including trajectory inference, people flow inference, event identification, and land parcel function analysis. MGIM reasons accurately from spatio-temporal context and dynamically adjusts its inferences as that context changes. Visualizations of the attention mechanisms further illustrate MGIM’s capacity to construct contextually aware representations and task-specific attention patterns analogous to those of natural language processing models. This study presents a new paradigm for general-purpose spatio-temporal modeling in real-world geographic scenarios, offering both theoretical and practical value and a promising route toward building a geographic foundation model.
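To make the masked-language-model analogy concrete, the following is a minimal sketch of generic token masking applied to a sequence of geographic elements. It is not MGIM's actual masking strategy, which the abstract does not specify: the token vocabulary, the `[MASK]` symbol, the `mask_sequence` helper, and the masking ratio are all illustrative assumptions.

```python
import random

MASK = "[MASK]"  # assumed placeholder symbol, borrowed from masked language models


def mask_sequence(tokens, mask_ratio=0.15, seed=None):
    """Hide a random subset of tokens and return what a model would have to recover.

    Returns (masked_tokens, targets), where targets maps each masked
    position to the original token at that position.
    """
    if not tokens:
        return [], {}
    rng = random.Random(seed)
    # Mask at least one element, and never more than the sequence holds.
    n_mask = min(len(tokens), max(1, round(len(tokens) * mask_ratio)))
    positions = rng.sample(range(len(tokens)), n_mask)
    masked = list(tokens)
    targets = {}
    for i in positions:
        targets[i] = masked[i]
        masked[i] = MASK
    return masked, targets


# Hypothetical context for one land parcel, mixing several element types.
parcel_context = [
    "parcel:residential",
    "poi:school",
    "flow:high",
    "time:weekday_08h",
    "event:none",
    "trajectory:cell_17",
]

masked, targets = mask_sequence(parcel_context, mask_ratio=0.3, seed=0)
print(masked)
print(targets)
```

A self-supervised model trained this way would be asked to predict the entries of `targets` from the unmasked remainder of `masked`, so the surrounding spatio-temporal context is the only source of information about each hidden element.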
