Abstract

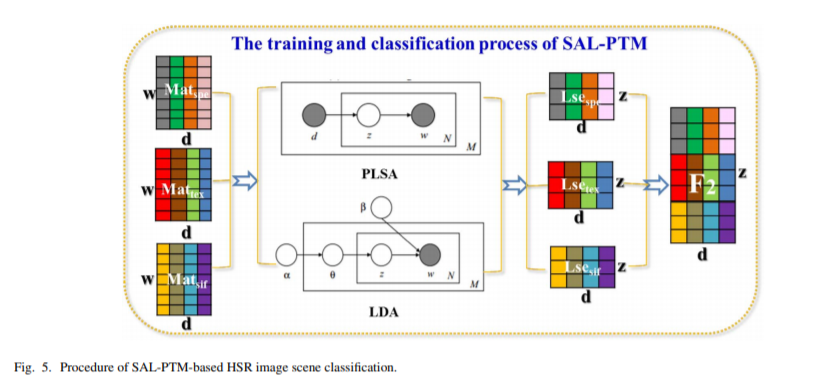

Scene classification has proven to be an effective method for the semantic interpretation of high spatial resolution (HSR) remote sensing images. The probabilistic topic model (PTM) has been successfully applied to natural scenes by utilizing a single feature (e.g., the spectral feature); however, a single feature is inadequate for HSR images due to the complex structure of the land-cover classes. Although several studies have investigated techniques that combine multiple features, the different features are usually quantized after simple concatenation (CAT-PTM). Unfortunately, due to the inadequate fusion capacity of k-means clustering, the words of the visual dictionary obtained by CAT-PTM are highly correlated. In this paper, a semantic allocation level (SAL) multifeature fusion strategy based on PTM, namely SAL-PTM (SAL-pLSA and SAL-LDA), is proposed for HSR imagery. In SAL-PTM: 1) the complementary spectral, texture, and scale-invariant feature transform (SIFT) features are effectively combined; 2) the three features are extracted and quantized separately by k-means clustering, which can provide appropriate low-level feature descriptions for the semantic representations; and 3) the latent semantic allocations of the three features are captured separately by PTM, which follows the core idea of PTM-based scene classification. The probabilistic latent semantic analysis (pLSA) and latent Dirichlet allocation (LDA) models were compared to test the effect of different PTMs for HSR imagery. A U.S. Geological Survey data set and the UC Merced data set were utilized to evaluate SAL-PTM in comparison with the conventional methods. The experimental results confirmed that SAL-PTM is superior to the single-feature methods and CAT-PTM in the scene classification of HSR imagery.
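The three steps of SAL-PTM can be illustrated with a minimal NumPy sketch. This is an illustration under stated assumptions, not the implementation evaluated in the paper: the function names (`kmeans`, `plsa`, `sal_fusion`), the dictionary size, the number of topics, and the random toy descriptors are all hypothetical. It also assumes that the per-feature topic proportions are fused by stacking them into one vector per image, and it fits all images jointly rather than separating training and test sets. Only the pLSA variant is shown.

```python
import numpy as np

def kmeans(X, k, iters=20, seed=0):
    """Plain Lloyd's k-means; returns the (k, d) centroid matrix."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)].copy()
    for _ in range(iters):
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(-1)
        labels = dist.argmin(1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = X[labels == j].mean(0)
    return centers

def word_histograms(per_image, centers):
    """Quantize each image's descriptors and count visual words per image."""
    rows = []
    for D in per_image:
        dist = ((D[:, None, :] - centers[None, :, :]) ** 2).sum(-1)
        rows.append(np.bincount(dist.argmin(1), minlength=len(centers)))
    return np.stack(rows).astype(float)

def plsa(N, n_topics, iters=50, seed=0):
    """EM for pLSA on a count matrix N (images x words); returns P(z|d)."""
    rng = np.random.default_rng(seed)
    n_docs, n_words = N.shape
    p_z_d = rng.random((n_docs, n_topics))
    p_z_d /= p_z_d.sum(1, keepdims=True)
    p_w_z = rng.random((n_topics, n_words))
    p_w_z /= p_w_z.sum(1, keepdims=True)
    for _ in range(iters):
        # E-step: P(z|d,w) is proportional to P(z|d) * P(w|z)
        post = p_z_d[:, :, None] * p_w_z[None, :, :]
        post /= post.sum(1, keepdims=True) + 1e-12
        # M-step: re-estimate both distributions from expected counts
        weighted = N[:, None, :] * post
        p_w_z = weighted.sum(0)
        p_w_z /= p_w_z.sum(1, keepdims=True) + 1e-12
        p_z_d = weighted.sum(2)
        p_z_d /= p_z_d.sum(1, keepdims=True) + 1e-12
    return p_z_d

def sal_fusion(features, k, n_topics):
    """Quantize and topic-model each feature separately, then stack the
    per-feature topic proportions (assumed fusion rule) for each image."""
    parts = []
    for per_image in features.values():
        centers = kmeans(np.vstack(per_image), k)    # separate dictionary
        hist = word_histograms(per_image, centers)   # separate word counts
        parts.append(plsa(hist, n_topics))           # separate topic model
    return np.hstack(parts)

# Toy stand-ins for spectral, texture, and SIFT descriptors (random data).
rng = np.random.default_rng(0)
n_images = 6
features = {
    "spectral": [rng.normal(size=(30, 3)) for _ in range(n_images)],
    "texture": [rng.normal(size=(30, 4)) for _ in range(n_images)],
    "sift": [rng.normal(size=(30, 8)) for _ in range(n_images)],
}
fused = sal_fusion(features, k=5, n_topics=2)
print(fused.shape)  # (6, 6): three features x two topics per image
```

Each row of `fused` is one image's representation and could be passed to a classifier of the reader's choice. By contrast, a CAT-PTM-style baseline would concatenate the raw descriptors first and run a single k-means and a single topic model on the result.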
