Abstract

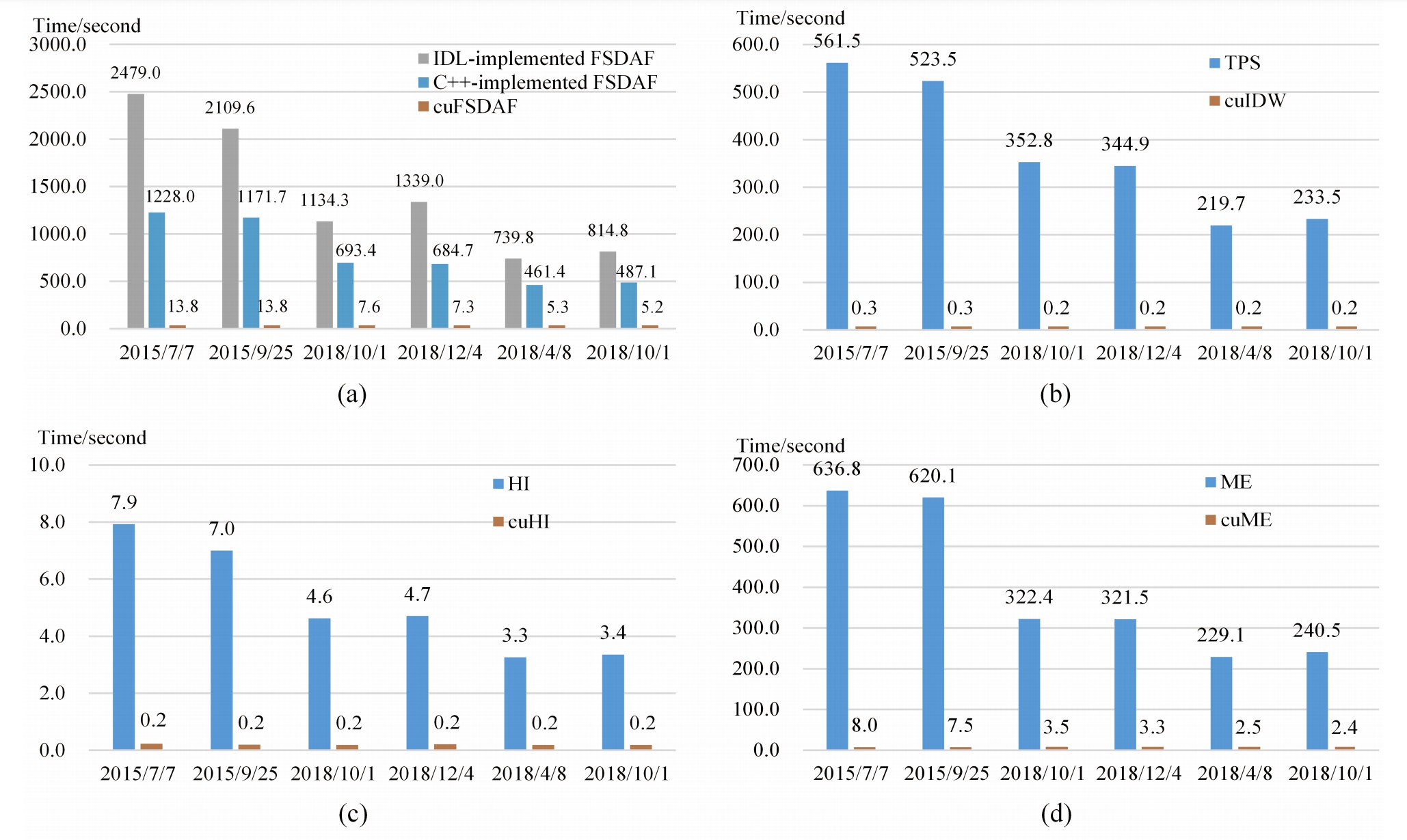

Spatiotemporal data fusion is a cost-effective way to produce remote sensing images with high spatial and temporal resolutions from multisource images. By combining spectral unmixing analysis with spatial interpolation, the flexible spatiotemporal data fusion (FSDAF) algorithm is suitable for heterogeneous landscapes and can capture abrupt land-cover changes. However, the high computational complexity of FSDAF prevents its use in large-scale applications and mass production. In addition, the domain decomposition strategy of FSDAF loses accuracy at the edges of subdomains because it does not adequately account for edge effects. In this study, an enhanced FSDAF (cuFSDAF) is proposed to address these problems through three main improvements. First, the thin plate spline (TPS) interpolator is replaced by an accelerated inverse distance weighted (IDW) interpolator to reduce computational complexity. Second, the algorithm is parallelized using the compute unified device architecture (CUDA), a widely used parallel computing framework for graphics processing units (GPUs). Third, an adaptive domain decomposition (ADD) method is proposed to improve the fusion accuracy at the edges of subdomains and to enable GPUs with varying computing capacities to process datasets of any size. Experiments showed that, while obtaining accuracies similar to those of FSDAF and an up-to-date deep-learning-based method, cuFSDAF reduced the computing time significantly and achieved speed-ups of 140.3–182.2 times over the original FSDAF program. cuFSDAF can efficiently produce fused images with both high spatial and temporal resolutions to support applications that study large-scale and long-term land surface dynamics.
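As a rough illustration of the interpolation idea named above, the following Python sketch shows plain inverse distance weighting for a single query point. It is not the cuFSDAF implementation, which is accelerated and runs on the GPU; the function name `idw_interpolate` and the parameters `power` and `radius` are assumptions made for this example only.

```python
import math


def idw_interpolate(samples, query, power=2.0, radius=None):
    """Estimate a value at `query` from (x, y, value) samples using IDW.

    Hypothetical helper for illustration; `power` and `radius` are assumed
    parameters, not names taken from cuFSDAF.
    """
    qx, qy = query
    weighted_sum = 0.0
    weight_total = 0.0
    for x, y, value in samples:
        dist = math.hypot(x - qx, y - qy)
        if dist == 0.0:
            # The query coincides with a sample, so return it directly.
            return value
        if radius is not None and dist > radius:
            # Ignore samples outside the optional search radius.
            continue
        weight = 1.0 / dist ** power  # closer samples weigh more
        weighted_sum += weight * value
        weight_total += weight
    if weight_total == 0.0:
        raise ValueError("no samples within the search radius")
    return weighted_sum / weight_total


if __name__ == "__main__":
    known = [(0.0, 0.0, 10.0), (2.0, 0.0, 20.0)]
    print(idw_interpolate(known, (1.0, 0.0)))
```

Each output pixel in such a scheme depends only on nearby samples and not on the other outputs, which is one reason an interpolator of this kind maps naturally onto one GPU thread per pixel.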

Source code and test data are available at https://github.com/HPSCIL/cuFSDAF.
